Language Models

Note

This was added in version 2.15.0.

It’s now possible to run the entire range of supported Large Language Models on CoCalc OnPrem.

You can configure this like all other settings in the “Admin panel” → “Site settings”.

Click on AI-LLM to filter the configuration values.

On top of that, you can limit the choices available to your users by including only selected models in the list called User Selectable LLMs.

In particular, running your own Ollama server and registering it might be an interesting option.

Note

Just like with all the other admin settings, you can also control their values via your my-values.yaml, in the global.settings dict.

Ollama

To tell CoCalc OnPrem to talk to your own Ollama server, you have to set the ollama_configuration site setting.

Assuming your Ollama server runs at the host and port ollama.local:11434, you have to set this in global.settings to access a model named gemma:

ollama_configuration: '{"gemma": {"baseUrl": "http://ollama.local:11434/", "cocalc": {"display": "Gemma", "desc": "Google''s Gemma Model"}}}'

You can add more models by adding more dictionary entries.
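As a rough sketch of what a second entry could look like, the fragment below registers two models on the same Ollama server. The model name llama3 and its display texts are hypothetical and only illustrate the shape of an additional entry. The nesting under global and settings is an assumption based on the global.settings path mentioned above. All JSON keys are quoted so the value parses as strict JSON, and each apostrophe is doubled because the whole value is a single-quoted YAML string.

```yaml
# my-values.yaml (fragment)
global:
  settings:
    # "llama3" is a hypothetical second model, shown for illustration only.
    # Both entries assume the same Ollama server at ollama.local:11434.
    ollama_configuration: '{"gemma": {"baseUrl": "http://ollama.local:11434/", "cocalc": {"display": "Gemma", "desc": "Google''s Gemma Model"}}, "llama3": {"baseUrl": "http://ollama.local:11434/", "cocalc": {"display": "Llama 3", "desc": "Meta''s Llama 3 Model"}}}'
```

Each top-level key of the JSON object is one model, so further models follow the same pattern.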

User Defined Models

Note

This has been available since version 3.0.3.

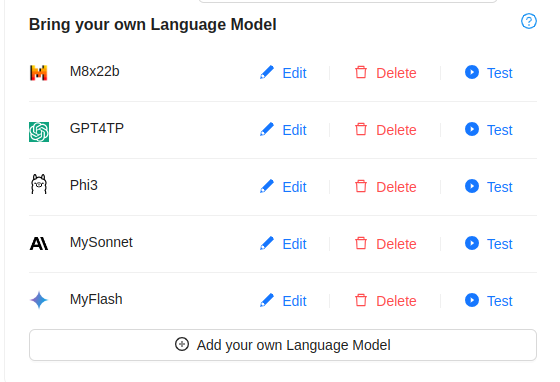

Users are also able to configure their own language model – either from a supported provider in the cloud or one hosted at another endpoint.

This can be enabled or disabled in the Site Settings under “User Defined LLM”, or in your my-values.yaml by setting user_defined_llm to "yes" or "no" in the global.settings dict.
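A minimal sketch of the my-values.yaml variant, assuming that global.settings maps to a settings dict nested under the top-level global key:

```yaml
# my-values.yaml (fragment)
global:
  settings:
    # Use "no" to disable user-defined models again.
    # The quotes keep YAML from reading yes/no as a boolean.
    user_defined_llm: "yes"
```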

With that, in the account settings there is this section: